CoDream: Exchanging dreams instead of models for federated aggregation with heterogeneous models

Overview

Federated Learning (FL) allows machine learning models to be optimized across decentralized sources of data while preserving data privacy by aggregating model parameters instead of data. Many FL techniques require the model architecture to be the same on all clients. This is a severe limitation, since some clients may lack the resources to train that shared model.

We present CoDream, a novel FL framework that aggregates “knowledge” derived from models rather than the models themselves. Instead of exchanging model parameters, clients start from randomly initialized data and optimize it with federated optimization in the data space. Together they synthesize representations of data, called dreams, that facilitate knowledge distillation. Our key insight is that dreams capture the knowledge embedded within local models and also allow local knowledge to be aggregated without ever sharing the raw data or models.

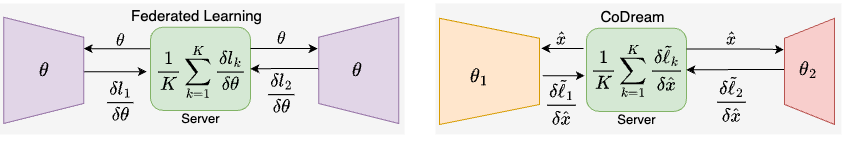

In FL, the server aggregates gradients with respect to model parameters. In CoDream, the server instead aggregates gradients with respect to the data, that is, the dreams (x), which allows clients to use different model architectures. Here K is the number of clients and l denotes the loss functions described in our paper.
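The data-space aggregation described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the CoDream implementation. Each client is a hypothetical quadratic loss that stands in for the paper's dream loss. The server takes a uniform average of the client gradients. Updates use plain gradient descent with a made-up learning rate. Names such as `federated_dream_step` are illustrative only.

```python
from typing import Callable, List

Vector = List[float]
# A client exposes only the gradient of its local loss w.r.t. the shared dream x;
# its model (of any architecture) never leaves the client.
GradFn = Callable[[Vector], Vector]


def make_quadratic_client(target: Vector) -> GradFn:
    """Hypothetical toy client with loss 0.5 * ||x - target||^2, so grad = x - target.

    Stands in for the real per-client dream loss, which would need the local model.
    """
    def grad(x: Vector) -> Vector:
        return [xi - ti for xi, ti in zip(x, target)]
    return grad


def aggregate(grads: List[Vector]) -> Vector:
    """Server step (uniform weighting assumed): average client gradients in data space."""
    k = len(grads)
    return [sum(g[i] for g in grads) / k for i in range(len(grads[0]))]


def federated_dream_step(x: Vector, clients: List[GradFn], lr: float) -> Vector:
    """One round: clients compute d(loss)/dx locally, the server averages, x is updated."""
    grads = [client(x) for client in clients]  # computed on each client
    avg = aggregate(grads)                     # only dream gradients reach the server
    return [xi - lr * gi for xi, gi in zip(x, avg)]


clients = [
    make_quadratic_client([1.0, 0.0]),
    make_quadratic_client([0.0, 1.0]),
    make_quadratic_client([2.0, 2.0]),
]
dream = [0.0, 0.0]  # stand-in for a random-noise initialization
for _ in range(200):
    dream = federated_dream_step(dream, clients, lr=0.1)
print([round(v, 3) for v in dream])  # → [1.0, 1.0]
```

With these quadratic stand-ins, the dream converges to the mean of the client targets. What matters is that the server only ever handles vectors the size of x, whatever each client's model looks like.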

Sharing knowledge in the data space offers numerous benefits:

- Flexibility: Different clients can have different model architectures.

- Scalability: Communication is independent of model size, removing the scalability concerns of exchanging large model parameters.

- Privacy: CoDream shares dreams rather than raw data, while remaining compatible with secure aggregation, thus preserving the privacy benefits of FL.
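The scalability point comes down to simple arithmetic: what a client uploads scales with the dream batch, not with its model. The sketch below rests on several assumptions. Values are fp32. The batch is a hypothetical 256 CIFAR10-shaped dreams. Each exchange carries one dream update plus one logit vector per dream. `dream_payload_bytes` is an illustrative helper, not part of CoDream.

```python
def dream_payload_bytes(batch: int, channels: int, height: int, width: int,
                        num_classes: int, bytes_per_value: int = 4) -> int:
    """Bytes a client uploads per exchange: dream updates plus logits (fp32 assumed).

    No argument describes the model: the payload depends only on the number of
    dreams, their shape, and the number of classes.
    """
    dream_values = batch * channels * height * width
    logit_values = batch * num_classes
    return (dream_values + logit_values) * bytes_per_value


# CIFAR10-shaped dreams (3x32x32, 10 classes) with a hypothetical batch of 256.
payload = dream_payload_bytes(256, 3, 32, 32, 10)
# For comparison: a hypothetical 11-million-parameter model sent as fp32.
model_bytes = 11_000_000 * 4
print(payload, model_bytes)  # → 3155968 44000000
```

The figure is per exchange. How many dream updates a training round involves is not specified here, so this compares payload sizes, not total traffic.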

We empirically validate CoDream on standard FL tasks, demonstrating competitive performance despite not sharing model parameters.

CoDream Pipeline

Overview of the CoDream pipeline.

The CoDream pipeline comprises three stages:

- Knowledge Extraction: Starting from random-noise images and frozen local models (teachers), clients generate dreams. They optimize the dreams to reduce the entropy of each teacher's output distribution, with a batch-norm regularization term and an adaptive loss as regularizers. The resulting dreams represent the knowledge extracted from the local models. Clients share their local updates of the dreams, together with their logits, with the server.

- Knowledge Aggregation: The server aggregates dreams and soft labels from clients to construct a FedDream dataset.

- Knowledge Acquisition: Clients update their local models through two-stage training: (i) knowledge distillation on the jointly optimized dreams, with clients acting as students, and (ii) training on their local datasets with cross-entropy loss.
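The soft-label side of this pipeline can be sketched in plain Python for a single dream. This minimal sketch rests on assumptions the text above does not state. The server averages the clients' softmax outputs uniformly. Distillation uses KL divergence with a temperature. The logits are made-up numbers. `entropy` only illustrates the quantity that knowledge extraction reduces. The batch-norm and adaptive regularizers, as well as the gradient step on the dream itself, are omitted.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with an optional distillation temperature."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats; extraction pushes this down on the teacher's output."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def aggregate_soft_labels(client_logits: List[List[float]],
                          temperature: float = 1.0) -> List[float]:
    """Assumed server rule: uniform average of the clients' softmax outputs for one dream."""
    probs = [softmax(z, temperature) for z in client_logits]
    k = len(probs)
    return [sum(p[i] for p in probs) / k for i in range(len(probs[0]))]


def kd_loss(teacher_probs: List[float], student_logits: List[float],
            temperature: float = 1.0) -> float:
    """KL(teacher || student) on one dream; the T^2 scaling of Hinton et al. is omitted."""
    q = softmax(student_logits, temperature)
    return sum(p * (math.log(p) - math.log(qi))
               for p, qi in zip(teacher_probs, q) if p > 0)


# Hypothetical logits from two heterogeneous clients on the same dream (3 classes).
client_logits = [[4.0, 1.0, 0.0], [3.0, 2.0, 0.0]]
soft_label = aggregate_soft_labels(client_logits)
# A student whose logits reproduce the soft label exactly incurs ~zero KD loss.
matching_student = [math.log(p) for p in soft_label]
print(soft_label)
print(kd_loss(soft_label, matching_student), kd_loss(soft_label, [0.0, 0.0, 0.0]))
```

Because the soft label is a probability vector over classes, clients with different architectures can all distill from it, as long as they share the label space.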

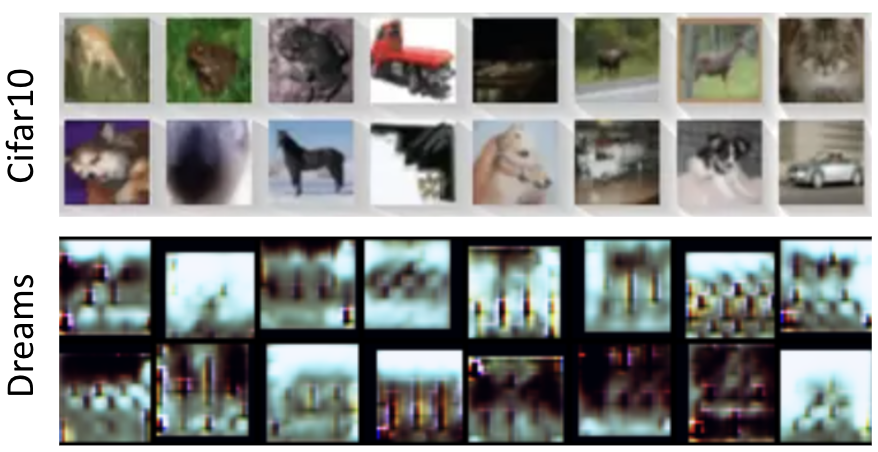

Visualization of dreams generated on the CIFAR10 dataset.

Results

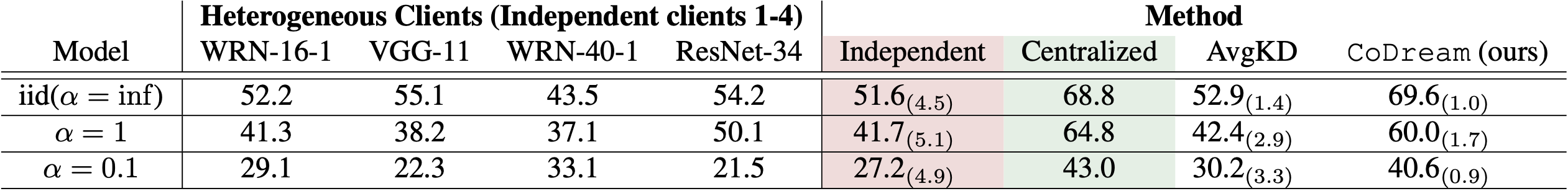

Performance comparison with heterogeneous client models on the CIFAR10 dataset. Left: accuracy of independent heterogeneous clients with different models. Right: average client model performance of CoDream compared with other baselines.

Citation

@article{singh2024codream,
  title={CoDream: Exchanging dreams instead of models for federated aggregation with heterogeneous models},
  author={Singh, Abhishek and Gupta, Gauri and Kapila, Ritvik and Shi, Yichuan and Dang, Alex and Shankar, Sheshank and Ehab, Mohammed and Raskar, Ramesh},
  journal={arXiv preprint arXiv:2402.15968},
  year={2024}
}